By Patricia Ainembabazi

As Artificial Intelligence (AI) reshapes digital ecosystems across the globe, one group remains consistently overlooked in discussions around AI design and governance: children. This gap was keenly highlighted at the Internet Governance Forum (IGF) held in June 2025 in Oslo, Norway, where experts, policymakers, and child-focused organisations called for more inclusive AI systems that protect and empower young users.

Children today are not just passive users of digital technologies; they are among the most active and most vulnerable user groups. In Africa, growth in internet use among youths aged 15 to 24 was partly fuelled by the Covid-19 pandemic, which helps explain their growing reliance on digital platforms for learning, play, and social interaction. New research by the Digital Rights Alliance Africa (DRAA), a consortium hosted by the Collaboration on International ICT Policy for East and Southern Africa (CIPESA), shows that this rapid growth in connectivity has amplified exposure to risks such as harmful content, data misuse, and algorithmic manipulation, all of which are especially pronounced for children.

The research notes that AI systems have become deeply embedded in the platforms that children engage with daily, including educational software, entertainment platforms, health tools, and social media. Nonetheless, Africa’s emerging AI strategies remain overwhelmingly adult-centric, often ignoring the distinct risks these technologies pose to minors. At the 2025 IGF, the urgency of integrating children’s voices into AI policy frameworks was made clear through a session supported by the LEGO Group, the Walt Disney Company, the Alan Turing Institute, and the Family Online Safety Institute. Their message was simple but powerful: “If AI is to support children’s creativity, learning, and safety, then children must be included in the conversation from the very beginning”.

The forum drew insights from recent global engagements such as the Children’s AI Summit of February 2025 held in the UK and the Paris AI Action Summit 2025. These events demonstrated that while children are excited about AI’s potential to enhance learning and play, they are equally concerned about losing creative autonomy, being manipulated online, and having their privacy compromised. A key outcome of these discussions was the need to develop AI systems that children can trust: systems that are safe by design, transparent, and governed with accountability.

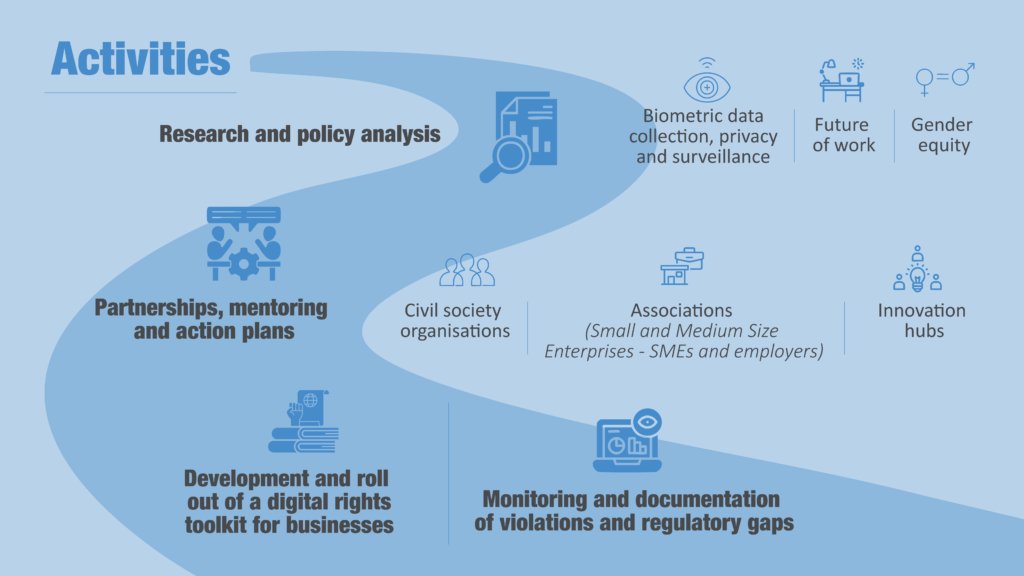

This global momentum offers important lessons for Africa as countries across the continent begin to draft national AI strategies. While many such strategies aim to spur innovation and digital transformation, they often lack specific protections for children. According to DRAA’s 2025 study on child privacy in online spaces, only a handful of African countries have enacted child-specific privacy laws in the digital realm. Although instruments like the African Charter on the Rights and Welfare of the Child recognise the right to privacy, regional frameworks such as the Malabo Convention, and even national data protection laws, rarely offer enforceable safeguards against AI systems that profile or influence children.

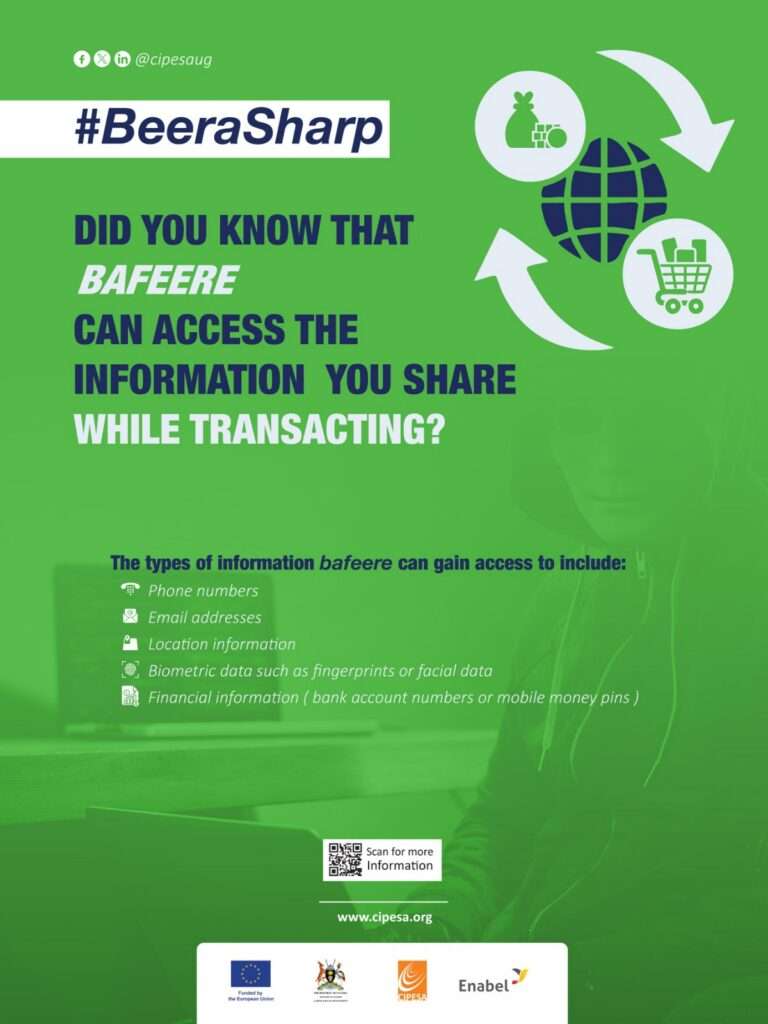

Failure to address these gaps will leave African children vulnerable to a host of AI-driven harms ranging from exploitative data collection and algorithmic profiling to exposure to biased or inappropriate content. These harms can deprive children of autonomy and increase their risk of online abuse, particularly when AI-powered systems are deployed in schools, healthcare, or entertainment without adequate oversight.

To counter these risks and ensure AI becomes a tool of empowerment rather than exploitation, African governments, policymakers, and developers must adopt child-centric approaches to AI governance. This could start with mainstreaming children’s rights, such as privacy, protection, education, and participation, into AI policies. International instruments like the UN Convention on the Rights of the Child and General Comment No. 25 provide a solid foundation upon which African governments can build desirable policies.

Furthermore, African countries should draw inspiration from emerging practices such as the “Age-Appropriate AI” frameworks discussed at IGF 2025. These practices propose clear standards for limiting AI profiling, nudging, and data collection among minors. Given that only 36 out of 55 African countries currently have data protection laws, with few of them containing child-specific provisions, policymakers must work to strengthen these frameworks. Such reforms should require AI tools targeting children to adhere to strict data minimisation, transparency, and parental consent requirements.

Importantly, digital literacy initiatives must evolve beyond basic internet safety to include AI awareness. Equipping children and caregivers with the knowledge to critically engage with AI systems will help them navigate and question the technology they encounter. At the same time, platforms similar to the Children’s AI Summit 2025 should be replicated at national and regional levels to ensure that African children’s lived experiences, hopes, and concerns shape the design and deployment of AI technologies.

Transparency and accountability must remain central to this vision. AI tools that affect children, whether through recommendation systems, automated decision-making, or learning algorithms, should be independently audited and publicly scrutinised. Upholding the values of openness, fairness, and inclusivity within AI systems is essential not only for protecting children’s rights but also for cultivating a healthy, rights-respecting digital environment.

As the African continent’s digital infrastructure expands and AI becomes more pervasive, the choices made today will define the digital futures of generations to come. IGF 2025 stressed that children must be central to these choices, not as an afterthought, but as active contributors to a safer and more equitable AI ecosystem. By elevating children’s voices in AI design and governance, African countries can lay the groundwork for an inclusive digital future that truly serves the best interests of all.