By Christian Cardona

2024 was a historic year for global elections, with approximately four billion eligible voters in 72 countries. It was also a historic year for AI-generated content, which had a significant presence in elections around the world. Synthetic media, or AI-generated media (visual, auditory, or multimodal content that has been generated or modified via artificial intelligence), can affect elections by disrupting voting procedures, shaping candidate narratives, and enabling the spread of harmful content. Widespread access to improved AI applications has increased both the quality and the quantity of synthetic content being distributed, accelerating harm and distrust.

As we look toward global elections in 2025 and beyond, it is vital that we recognize that one of the primary harms of generative AI in 2024 elections has been the creation of deepnudes of women candidates. Not only is this type of content harmful to the individuals targeted, but it also likely creates a chilling effect on women's political participation in future elections. The AI and Elections Community of Practice (COP) has provided key insights such as these, along with actionable data that can help inform policymakers and platforms as they seek to safeguard future elections in the AI age.

To understand how various stakeholders anticipated and addressed the use of generative AI during elections, and how they are responding to potential risks, the COP gave Partnership on AI (PAI) stakeholders an avenue to present their ongoing efforts, receive feedback from peers, and discuss difficult questions and tradeoffs around deploying this technology. In the final three meetings of the eight-part series, PAI was joined by the Center for Democracy & Technology (CDT), the Collaboration on International ICT Policy for East and Southern Africa (CIPESA), and Digital Action to discuss AI's use in election information and AI regulation in the West and beyond.

Investigating the Spread of Election Information with Center for Democracy & Technology (CDT)

The Center for Democracy & Technology has worked for thirty years to advance civil rights and civil liberties in the digital age, including through almost a decade of research and policy work on trust, security, and accessibility in American elections. In the sixth meeting of the series, CDT provided an inside look at two recent research reports on the intersection of democracy, AI, and elections.

The first report investigates how chatbots from companies such as OpenAI, Anthropic, Mistral AI, and Meta respond to election-related queries, specifically from voters with disabilities. The report found that 61% of the chatbots' responses were insufficient in at least one of the four ways the study assessed: incorrect information, omission of key information, structural issues, or evasion. Notably, 41% of the responses contained factual errors, such as incorrect voter registration deadlines, and in one case a chatbot cited a non-existent law. A quarter of the responses were likely to prevent or dissuade voters with disabilities from voting, raising concerns about the reliability of chatbots as a source of important election information.

The second report explored political advertising across social media platforms and how policy changes at seven major tech companies over the last four years have affected US elections. As organizations seek more opportunities to leverage generative AI tools in an election context, whether for chatbots or political ads, they must continue investing in research on user safety, implement evaluation thresholds for deployment, and ensure full transparency about product limitations once deployed.

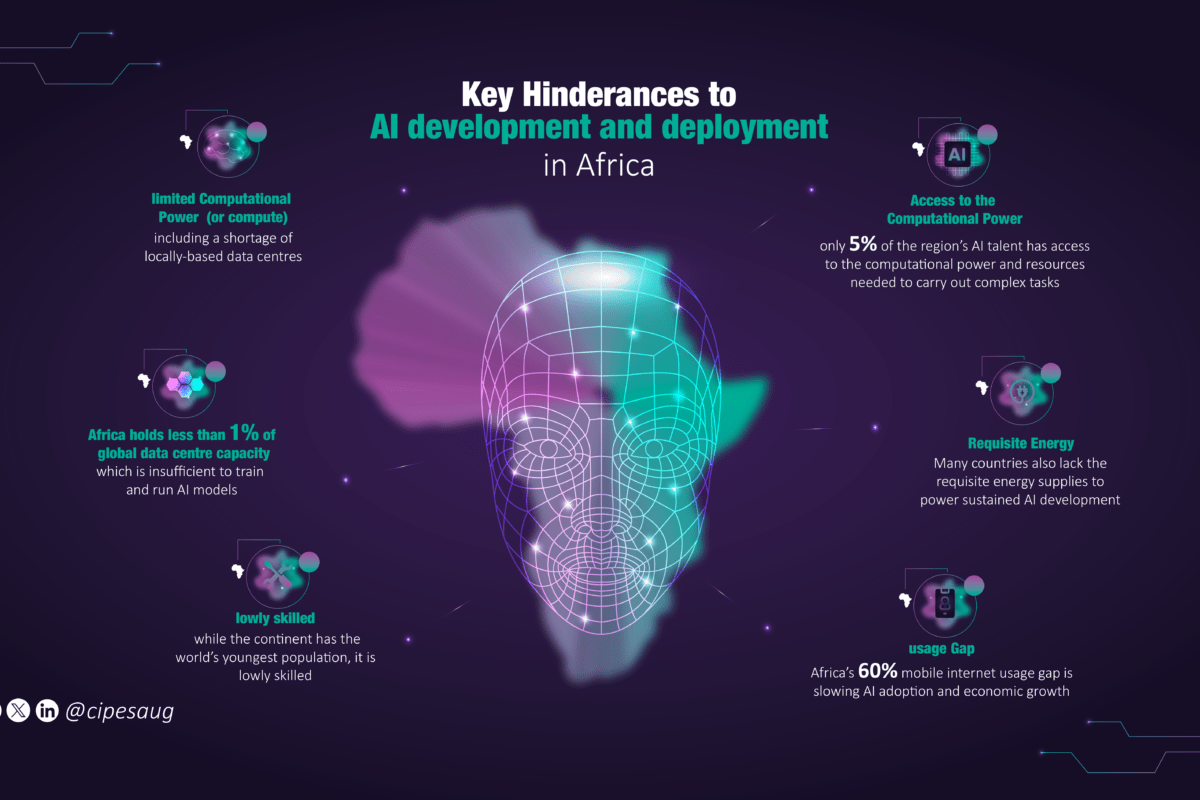

AI Regulations and Trends in African Democracy with CIPESA

A “think and do tank,” the Collaboration on International ICT Policy for East and Southern Africa focuses on technology policy and practice as it intersects with society, human rights, and livelihoods. In the seventh meeting of the series, CIPESA provided an overview of its work on AI regulation and trends in Africa, touching on topics such as national and regional AI strategies, elections, and harmful content.

As the use of AI continues to grow in Africa, most AI regulation across the continent focuses on ethical use and human rights impacts but lacks specific guidance on AI's impact on elections. Case studies show that AI is undermining electoral integrity on the continent and distorting public perception, particularly because many people have limited ability to identify and fact-check misleading content. A June 2024 report by Clemson University’s Media Forensics Hub found that the Rwandan government used large language models (LLMs) to generate pro-government propaganda during elections in early 2024. Over 650,000 messages attacking government critics, designed to look like authentic support for the government, were sent from 464 accounts.

The 2024 general elections in South Africa saw similar misuse of AI, with AI-generated content targeting politicians and leveraging racial and xenophobic undertones to sway voter sentiment. Examples include a deepfake depicting Donald Trump supporting the uMkhonto weSizwe (MK) party and a manipulated 2009 video of rapper Eminem that made him appear to support the Economic Freedom Fighters (EFF) party. The discussion emphasized the need to keep a close focus on AI as it advances in the region, with particular attention to mitigating the challenges it poses in electoral contexts.

AI tools are lowering the barrier to entry for those seeking to sway elections, whether individuals, political parties, or ruling governments. As the use of AI tools grows in Africa, countries must implement stronger regulation of AI use in elections (without stifling expression) and ensure that country-specific efforts are part of a broader regional strategy.

Catalyzing Global AI Change for Democracy with Digital Action

Digital Action is a nonprofit organization that mobilizes civil society organizations, activists, and funders across the world to call out digital threats and take joint action. In the eighth and final meeting in the PAI AI and Elections series, Digital Action shared an overview of the organization’s Year of Democracy campaign. The discussions centered on protecting elections and citizens’ rights and freedoms across the world, as well as on how social media content has affected elections.

The main focus of Digital Action’s work in 2024 was supporting the Global Coalition for Tech Justice, which called on Big Tech companies to fully and equitably resource efforts to protect 2024 elections through a set of specific, measurable demands. While the media expected to see high-profile examples of generative AI swaying election results around the world, they instead saw corrosive effects on political campaigning, harms to individual candidates and communities, and likely broader harms to trust and future political participation.

AI-generated content shared on social media affected elections in many countries, including Pakistan, Indonesia, India, South Africa, and Brazil, with minorities and female political candidates particularly vilified. In Brazil, in the lead-up to the 2024 municipal elections, deepnudes depicting two female politicians appeared on a social media platform and on adult content websites. Although one of the politicians took legal action, the slow pace of court processes and the lack of proactive steps by social media platforms prevented a timely resolution.

To mitigate future harms, Digital Action called for each Big Tech company to establish and publish fully and equitably resourced Action Plans (globally and for each country holding elections). By doing so, tech companies can provide greater protection to groups, such as female politicians, that are often at risk during election periods.

What’s To Come

PAI’s AI and Elections COP series has concluded after eight convenings with presentations from industry, media, and civil society. Over the course of the year, presenters offered attendees different perspectives and real-world examples of how generative AI has affected global elections, as well as how platforms are working to combat harm from synthetic content.

Some of the key takeaways from the series include:

- Down-ballot candidates and female politicians are more vulnerable to the negative impacts of generative AI in elections. While there were some attempts to use generative AI to influence national elections (you can read more about this in PAI’s case study), down-ballot candidates were often more susceptible to harm than nationally recognized ones. Local candidates with fewer resources were often unable to effectively combat harmful content. Deepfakes were also shown to hinder greater participation by female politicians in some general elections.

- Platforms should dedicate more resources to localizing generative AI policy enforcement. Platforms are attempting to protect users from harmful synthetic content by being transparent about the use of generative AI in election ads, providing resources to elected officials to tackle election-related security challenges, and adopting many of the disclosure mechanisms recommended in PAI’s Synthetic Media Framework. However, they have fallen short in localizing enforcement, lacking both language support and in-country collaboration with local governments, civil society organizations, and community organizations that represent minority and marginalized groups, such as persons with disabilities and women. As a result, generative AI has been used to cause real-world harm before platforms addressed it.

- Globally, countries need to adopt more coherent regional strategies to regulate the use of generative AI in elections, balancing free expression and safety. In the U.S., the lack of federal legislation on the use of generative AI in elections has led to various individual efforts from states and industry organizations. The result is a fragmented approach to keeping users safe, with no cohesive overall strategy. In Africa, countries' attempts to regulate AI are highly disparate. Some countries, such as Rwanda, Kenya, and Senegal, have adopted AI strategies that emphasize infrastructure and economic development but fail to address how to mitigate the risks generative AI poses to free and fair elections. While governments around the world have shown some initiative to catch up, they must work with organizations at both the industry and state level to implement best practices and lessons learned. These government efforts cannot exist in a vacuum. Regulations must cohere with and contribute to broader global governance efforts to regulate the use of generative AI in elections while ensuring safety and free speech protections.

While the AI and Elections Community of Practice has come to an end, we continue to push forward in our work to responsibly develop, create, and share synthetic media.

This article was initially published by Partnership on AI on March 11, 2025.